Last update: November 4, 2019

Smartphone users record front camera videos for much the same purposes as they shoot still images. As with stills, there is an abundance of mobile apps for editing videos and applying filters and effects. Social media and messaging apps such as Facebook, Instagram, Snapchat, and WhatsApp all let users share video clips just as they share photos. However, in addition to sharing pre-recorded clips, many apps now also allow live video streaming. Furthermore, people now frequently use front cameras for video calls with applications such as Skype or FaceTime.

Video versus still image testing

The use cases, methods, and equipment we use for our video image quality testing and evaluation are very similar to those for stills, too, so be sure to also read our article on “How we test smartphone front camera still image quality,” as it will provide you with useful background knowledge for this article.

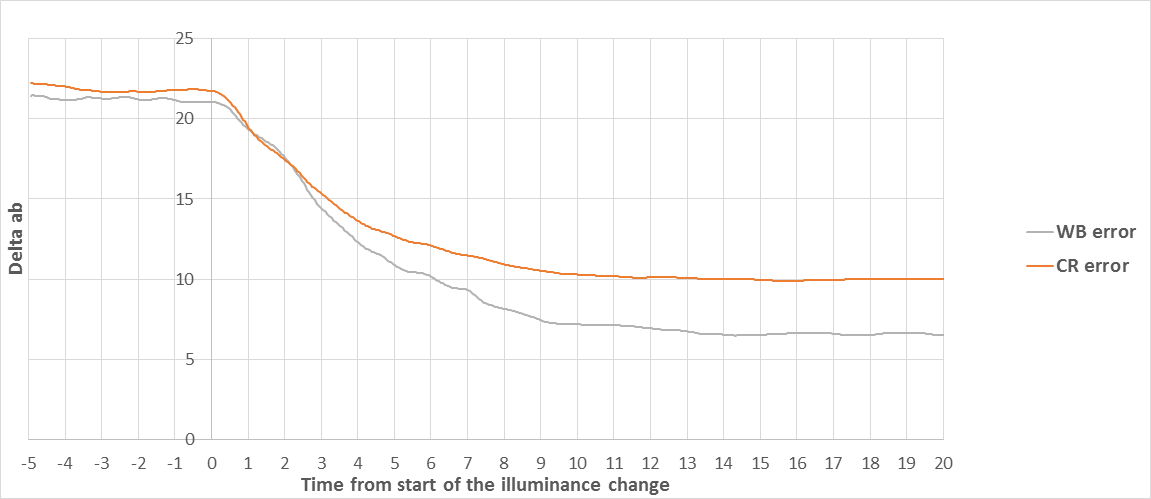

Despite the similarities, there are image quality aspects that are unique to video. Video stabilization is an important attribute that has a big impact on the overall quality of a video recording. The same is true for continuous focus, as an unstable autofocus can easily ruin an otherwise good clip. The most crucial difference between still image and video testing, however, is the addition of a temporal (time) dimension to most other image quality attributes. The tests for most static attributes that look only at a single frame of a video clip—for example, target exposure, dynamic range, or white balance—are pretty much identical to the equivalent tests for still images. However, a video clip is not a single static image, but is rather many frames that are recorded and played back in quick succession. We therefore also have to look at image quality from a temporal point of view—for example, how stable are video exposure and white balance in uniform light conditions? How fast and smooth are exposure or white balance transitions when light levels or sources change during recording?

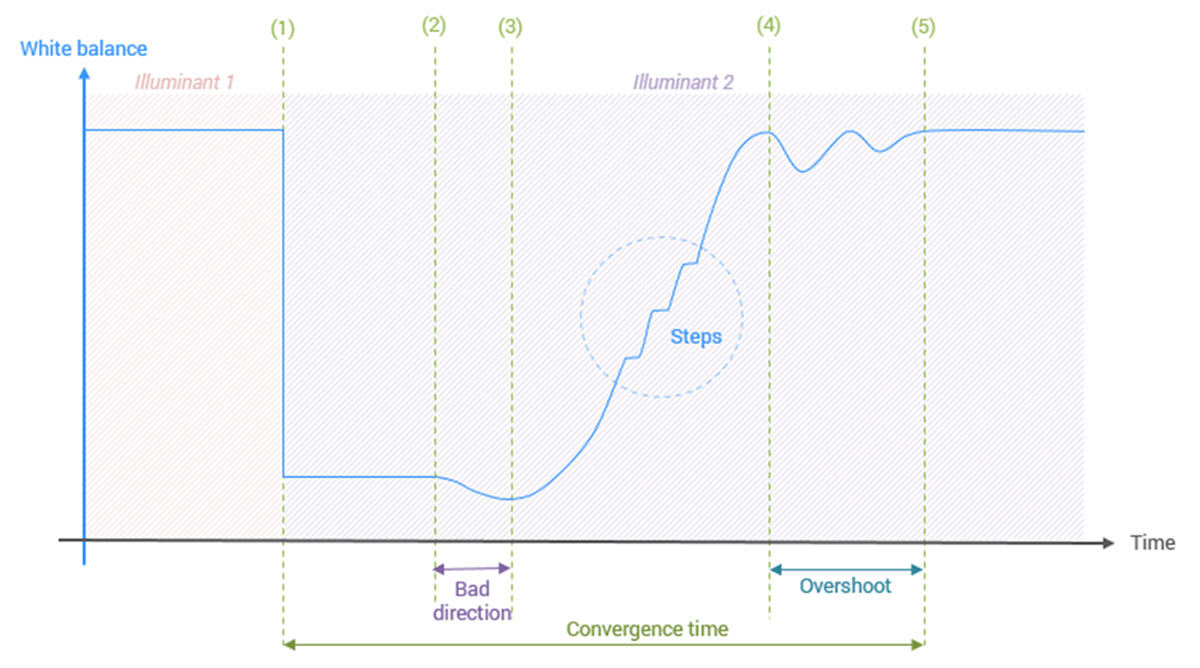

The example graph below shows the problems that can potentially occur when a camera’s auto white balance system has to react to a change of light source during recording: after the illuminant changes (1), the camera is slow to react; then it first corrects for white balance by going in the wrong direction (2); then the camera corrects in the right direction, but the curve shows some stepping (3). Once the camera has achieved correct white balance for the first time, the curve shows a lot of oscillation (4) before finally settling in (5). (Note that the graph below plots the camera’s behavior during a change of illuminant, but the equivalent exposure graph would actually look quite similar.)

Temporal testing attributes aside, other testing conditions and procedures are almost identical to those for still images. Our Selfie video testing protocol requires that we shoot several hours of video of test charts and scenes in the DXOMARK image quality laboratory, as well as a variety of indoor and outdoor “real-life” scenes. Light conditions include bright outdoor light and many types of artificial light sources in indoor conditions. Our studio test light levels range from a bright 1000 lux down to a very low 1 lux.

As with front camera stills, Selfie videos should be optimized for portraiture at close shooting distances. For video testing, we shoot at subject distances of 30 cm and 50 cm, using single and multiple subjects of varying skin tones so as to reliably cover as many use cases as possible. As with the Photo score, we calculate the DXOMARK Selfie Video score using both objective measurements and perceptual scores from a panel of image quality experts. We then condense objective and perceptual measurements into Video sub-scores, to which we apply our proprietary formulas and weighting systems to finally arrive at the Overall score.

It is also worth noting that for video testing we manually select the resolution setting and frame rate that provide the best video quality, while for stills we always test at the default resolution. For example, a smartphone front camera might offer a 4K video mode but use 1080p Full-HD by default. In these circumstances we select 4K resolution manually.

The DXOMARK Selfie Video sub-scores

This section describes in more detail the video attributes we test and analyze in order to compute our Selfie Video sub-scores.

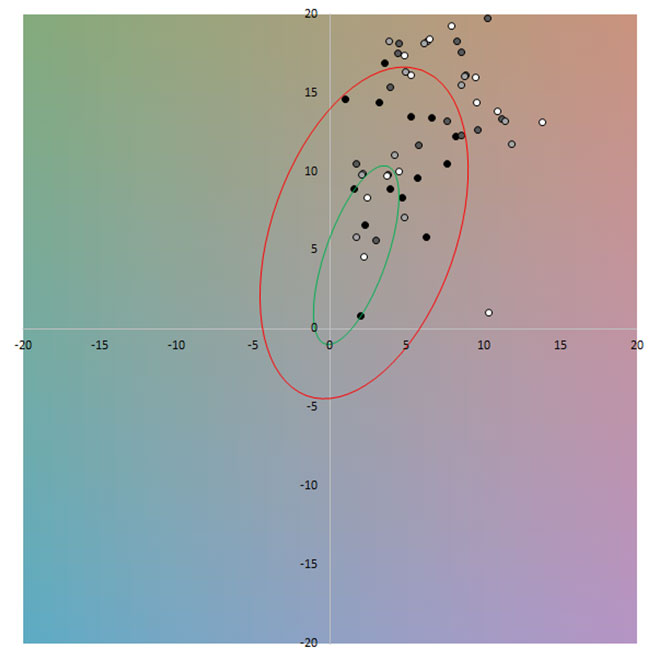

Exposure

Our Exposure score looks at target exposure, contrast, and dynamic range from both a static and temporal point of view. As with still images, we weight subject and background exposure differently depending on shooting distance. For closeups, the face is the central image element. With the camera further away from the subject, the background becomes more important. We undertake exposure tests in the lab at light levels from 1 to 1000 lux, using a range of test charts and equipment. In addition, we shoot outdoors on bright sunny days and indoors in our offices.

We compute the score using these objective lab measurements and the results of our perceptual analyses. Testing for static image attributes is pretty much the same as for still images, but given that some technologies, such as HDR, are only now becoming available for video, the results can be quite different. The static video quality attributes we test for Exposure are:

- Overall target exposure and repeatability

- Face target exposure and repeatability

- Face target exposure consistency in group shots

- Highlight clipping on faces

- Highlight and shadow detail in the background

As for stills, we also report if contrast is unusually high or low, but we don’t feed this information into the score, as contrast is mainly a matter of personal taste. As mentioned in the introduction above, we have to take temporal image quality attributes as well as static attributes into account when testing video. For Exposure, we test for the following temporal attributes:

- Convergence time and smoothness

- Oscillation time

- Overshoot

- Stability
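
The four temporal attributes above can be pictured as measurements taken on a recorded exposure curve after a step change in light level. The following is only a minimal sketch of one possible way to compute them, not DXOMARK's actual method: the function name `step_response_metrics`, the 5% tolerance band, and the exact definition of each metric are assumptions made for illustration.

```python
from statistics import pstdev


def step_response_metrics(times, values, target, tolerance=0.05):
    """Summarize how a measured signal settles on `target` after a light change.

    times     -- sample timestamps in seconds, starting at the light change
    values    -- measured signal per sample (e.g. mean level of a gray patch)
    target    -- level the camera should settle on
    tolerance -- half-width of the acceptance band as a fraction of target
                 (hypothetical default, chosen only for this example)
    """
    band = abs(target) * tolerance
    inside = [abs(v - target) <= band for v in values]

    # Convergence: first sample at which the signal enters the band.
    first_in = next((i for i, ok in enumerate(inside) if ok), None)

    # Settling: first sample after which the signal never leaves the band.
    last_out = max((i for i, ok in enumerate(inside) if not ok), default=-1)
    settled = last_out + 1
    has_settled = first_in is not None and settled < len(values)

    # Overshoot: largest excursion past the target in the direction of travel.
    direction = 1 if target >= values[0] else -1
    overshoot = max(0, max((v - target) * direction for v in values))

    return {
        "convergence_time": times[first_in] if first_in is not None else None,
        # Time spent bouncing in and out of the band after first reaching it.
        "oscillation_time": times[settled] - times[first_in] if has_settled else None,
        "overshoot": overshoot,
        # Residual fluctuation once the signal has settled.
        "stability": pstdev(values[settled:]) if has_settled else None,
    }
```

Under these assumed definitions, a camera that reaches the target quickly but then bounces around it would show a short convergence time and a long oscillation time, which is why the two are reported separately.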

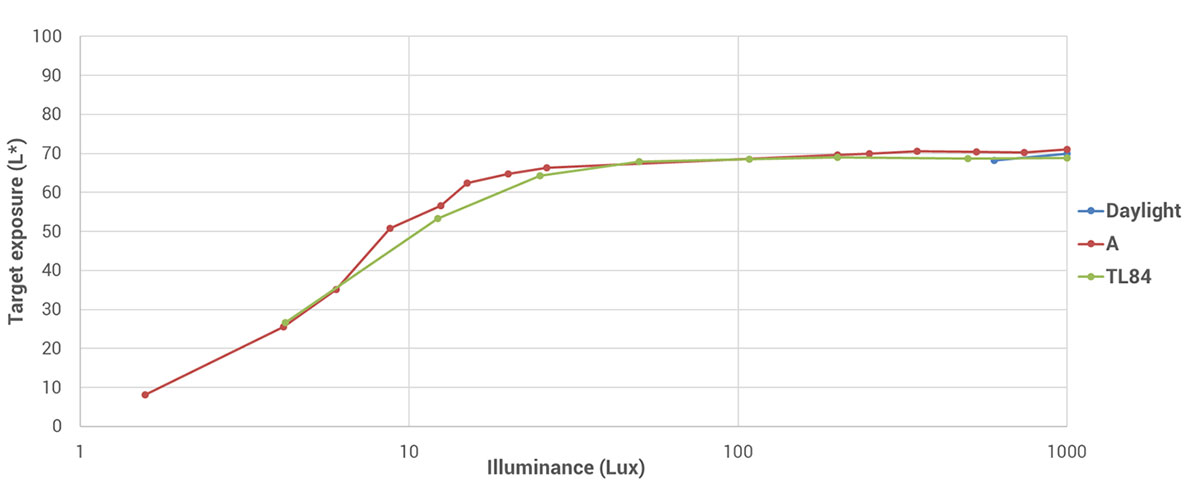

The sample graph below shows how the camera’s video exposure system reacts to changes in light conditions, ranging from fairly small steps (5 to 30 lux) to extreme changes in brightness (30 to 1000 lux).

To perceptually analyze exposure performance, our panel of image quality experts goes through a large number of sample clips and rates them according to the following criteria:

- Target exposure on faces and repeatability for two subject distances and several lighting conditions

- Saturation and clipping issues on faces

- Highlight and shadow detail in the background

- Exposure stability and smoothness when panning and walking while recording

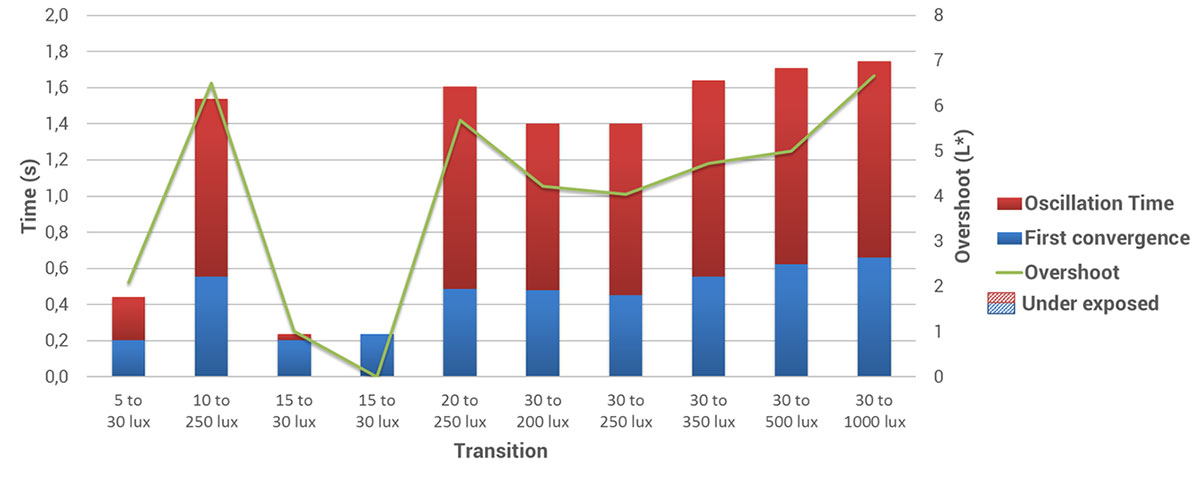

Color

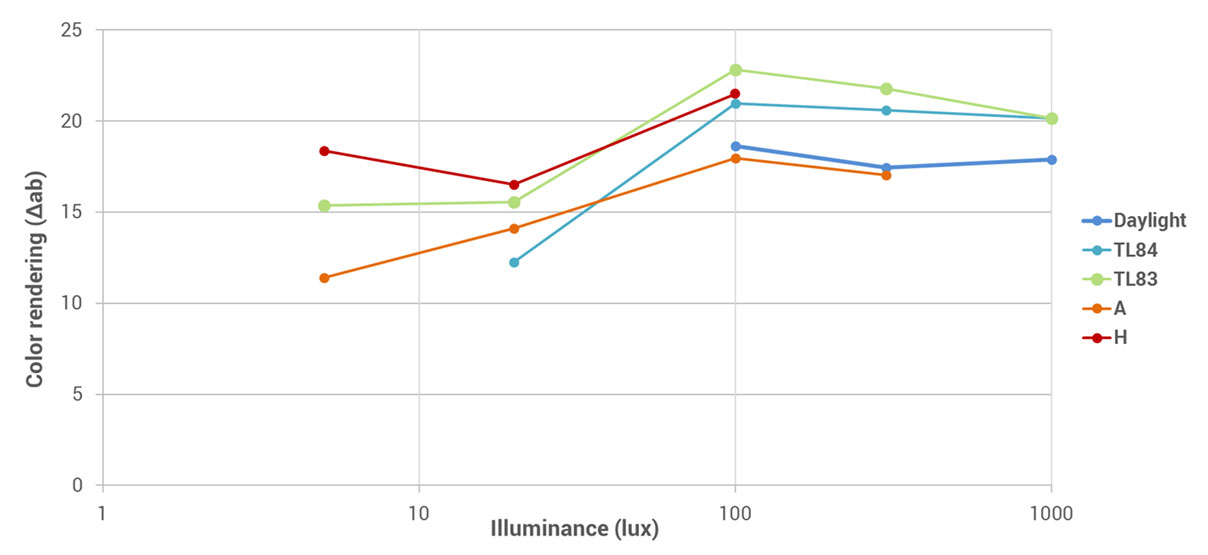

We test color in the lab at light levels from 1 to 1000 lux, using Gretag ColorChecker charts, our own proprietary DXOMARK chart, and a gray chart. We also shoot outside on sunny days and inside the DXOMARK offices. As for stills, we use our ellipsoid scoring system for white balance and color rendering.

Points within the red circle are still acceptable but score lower.
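
To illustrate the general idea of a tolerance region in a color plane, here is a hedged sketch of a score that is full inside an inner ellipse, falls off between the inner and an outer boundary, and is zero beyond that. The details are assumptions for illustration only, not the actual DXOMARK ellipsoid scoring system: the function names, the linear falloff, and the use of a scaled copy of the inner ellipse as the outer boundary are all hypothetical.

```python
import math


def ellipse_distance(a, b, center, semi_axes, angle_deg=0.0):
    """Normalized distance of point (a, b) from the ellipse center.

    Returns 1.0 for a point exactly on the ellipse boundary, less inside.
    """
    da, db = a - center[0], b - center[1]
    t = math.radians(angle_deg)
    # Rotate the offset into the ellipse's own axes.
    u = da * math.cos(t) + db * math.sin(t)
    v = -da * math.sin(t) + db * math.cos(t)
    return math.hypot(u / semi_axes[0], v / semi_axes[1])


def tolerance_score(a, b, center, semi_axes, outer_scale=2.0, angle_deg=0.0):
    """Score in [0, 1] for a measured color point (hypothetical scheme).

    Inside the inner ellipse: 1.0. Between the inner ellipse and the same
    ellipse scaled by `outer_scale`: linear falloff. Outside: 0.0.
    """
    d = ellipse_distance(a, b, center, semi_axes, angle_deg)
    if d <= 1.0:
        return 1.0
    if d >= outer_scale:
        return 0.0
    return (outer_scale - d) / (outer_scale - 1.0)
```

An elongated ellipse lets such a score tolerate a larger deviation along one color axis than along the other, which a single circular threshold cannot express.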

We compute the Color score from objective lab measurements and the results of our perceptual analysis. Static video quality attributes for Color are:

- White balance accuracy and repeatability

- Color rendering accuracy and repeatability

- Skin tone color rendering in group shots

- Color shading

We also test the following temporal attributes under changing light conditions:

- White balance smoothness and stability

- Color rendering smoothness and stability

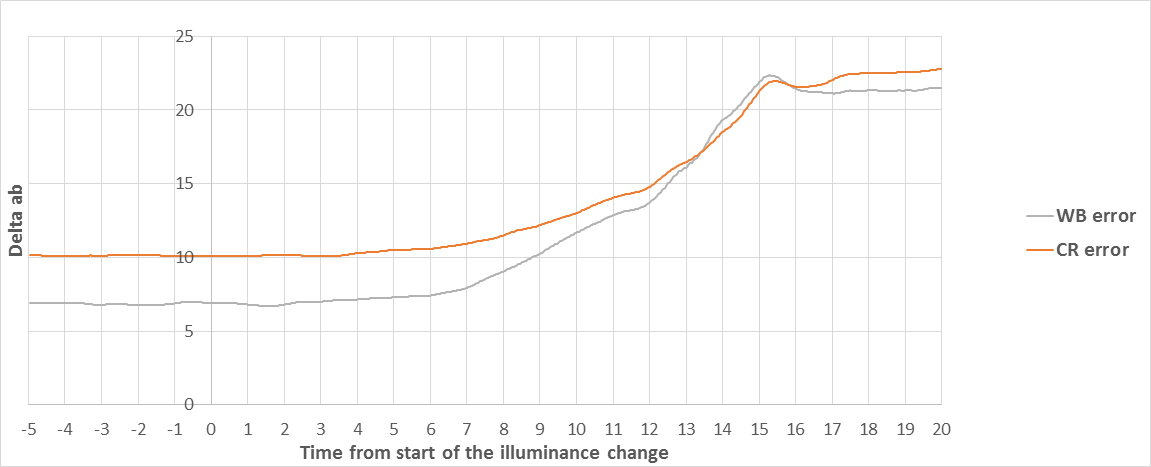

In the first section of this article, you saw a sample graph that illustrates the problems that can occur during a sudden change of light during video recording. The graphs below show what happens during slower changes in lighting conditions. For this test, we slowly ramp the light level up from 20 lux to 300 lux, and in the process also slightly change the color temperature. We then do the same test in reverse.

In the left graph you can see a smooth white balance transition, without any sudden changes or other irregularities. In the right graph, the transition is pretty smooth as well, but there is a noticeable overshoot between 15 and 16 seconds. The white balance system overcompensates slightly and then readjusts.

For our perceptual analysis of Color, we check white balance accuracy and repeatability for two distances and several light sources, and use group portraits to check the rendering of different types of skin tones. In addition, we check our sample clips for color shading under several tungsten and fluorescent illuminants.

Focus

All our focus testing is perceptual in nature. We test front camera Focus at light levels of 10, 100, and 1000 lux in the lab; on bright sunny days outdoors; and indoors in the DXOMARK offices. For video, we test at subject distances of 30 and 55 cm. Static attributes for Focus are:

- Focus range

- Depth of field

We evaluate depth of field by checking the detail in the background of portrait clips and the sharpness of subjects in group portraits who are not in the focal plane.

Focus Stability is the only temporal attribute we use in focus testing. At this point in time, not many smartphones come with an autofocus system in the front camera. AF systems can help achieve better sharpness, but can also cause problems in terms of stability while recording.

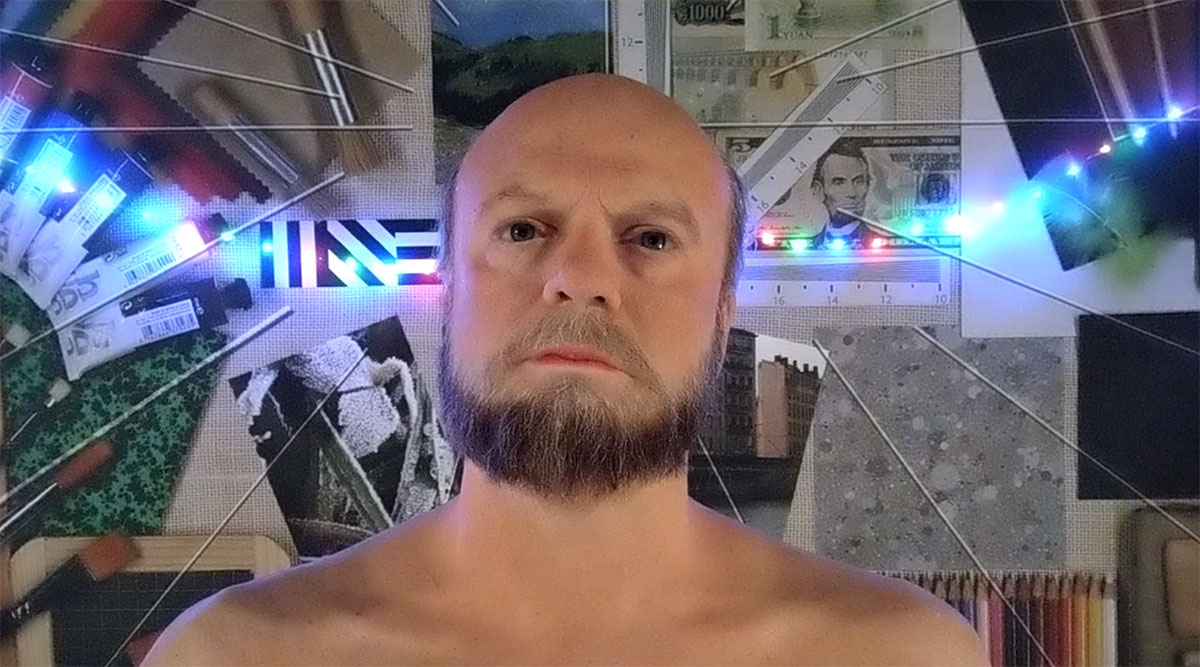

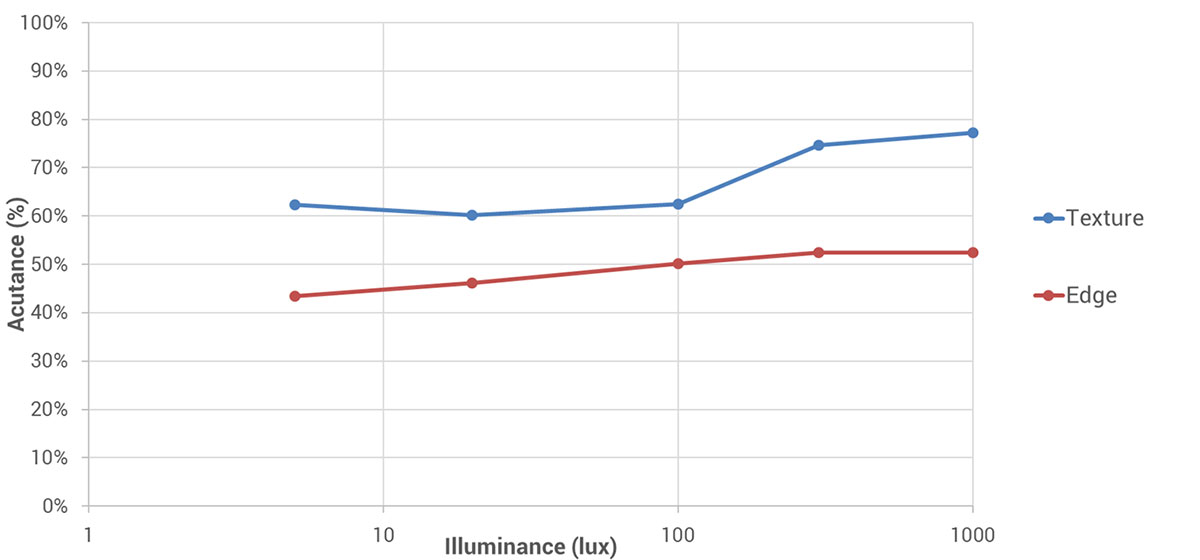

Texture and Noise

We test Texture and Noise in the lab at light levels from 1 to 1000 lux and under different light sources, using Dead Leaves charts and the same life-like mannequin we use in our still image testing. We also test outside on sunny days and inside the DXOMARK offices using human subjects. We do all our tests at subject distances of 30 and 50 cm.

Texture and Noise test attributes:

- Texture and edge acutance and repeatability

- Visual and temporal noise levels and repeatability

We test texture and edge acutance in static scenes and in scenes with both camera and subject motion. It is important to test both scenarios, as frame-stacking algorithms can easily reduce noise in static scenes, but can often struggle to achieve the same results when some scene elements are in motion. To test the impact of camera shake on video texture and detail, we perceptually analyze footage shot during tripod movement as well as footage recorded handheld.
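
To make the point about frame stacking concrete, the sketch below measures temporal noise as the average per-pixel standard deviation across frames of a static scene, then shows the effect of averaging consecutive frames. It is an illustration under stated assumptions rather than the measurement we actually use: the function names, the flat-list frame representation, and the synthetic Gaussian noise are all hypothetical.

```python
import random
from statistics import mean, pstdev


def temporal_noise(frames):
    """Mean per-pixel standard deviation across frames of a static scene.

    Each frame is a flat list of pixel values; all frames have equal length.
    """
    pixels_over_time = zip(*frames)  # one tuple of values per pixel position
    return mean(pstdev(p) for p in pixels_over_time)


def stack_frames(frames, window):
    """Average every run of `window` consecutive frames (sliding window)."""
    stacked = []
    for i in range(len(frames) - window + 1):
        group = frames[i:i + window]
        stacked.append([mean(px) for px in zip(*group)])
    return stacked


def synthetic_frames(n_frames, n_pixels, level, sigma, seed=0):
    """Static uniform patch at `level` with Gaussian noise (test data only)."""
    rng = random.Random(seed)
    return [[rng.gauss(level, sigma) for _ in range(n_pixels)]
            for _ in range(n_frames)]


frames = synthetic_frames(n_frames=40, n_pixels=64, level=128.0, sigma=4.0)
print("raw temporal noise:    ", round(temporal_noise(frames), 2))
print("stacked temporal noise:", round(temporal_noise(stack_frames(frames, 4)), 2))
```

In a static scene every frame in the window shows the same content, so averaging suppresses only the noise. When the subject or camera moves, the same averaging would blend different content together, so a real algorithm has to align frames or reduce the stacking, and the noise reduction shrinks accordingly.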

To perceptually analyze texture, we concentrate on the areas of the face that should always show good detail—for example, eyes, eyebrows, eyelashes, lips, and beard. To perceptually analyze noise, we look closely at the skin tones of our video subjects.

Artifacts

We look at the same set of artifacts for video testing as for still images, including the ones in the list below, but also keep our eyes open for any other type of video-specific artifacts we might come across.

- Sharpness in the field

- Lens shading

- Lateral chromatic aberration

- Distortion

- Perspective distortion on faces (anamorphosis)

- Color fringing

- Color quantization

- Flare

- Ghosting

Stabilization

Stabilization is just as important for front camera video as it is for main camera video. We test video stabilization by hand-holding the camera without motion and by shooting while walking, at a subject distance of 30 cm and at arm’s length. For video calls, one of the most important use cases for front camera video, users typically hand-hold the device close to their faces (30 to 40 cm). For this kind of static video, the stabilization algorithms should counteract all hand motion but not react to any movements of the subject’s head.

For group selfie videos, we have to check that stabilization works well for all the faces in the frame. In the sample below, you can see that only the main subject’s head is well-stabilized, and that there is noticeable deformation on the other subjects in the video.

Walking with the camera is the most challenging use case for stabilization systems, as walking produces large-amplitude motion that requires much stronger stabilization than a static scene. Ineffective video stabilization in such cases can often result in intrusive deformation effects.

We hope you found this overview of our front camera video testing and evaluation useful. For an introduction to our new DXOMARK Selfie test protocol, an article on the evolution of the selfie, and more information on how we test and evaluate front camera still image quality, please click on the following links:

- Introducing the DXOMARK Selfie test protocol

- The evolution of the selfie

- DXOMARK Selfie: How we test smartphone front camera still image quality
